
#Integration guide code

Before we go into the details of how to write your own Spark Streaming program, let's take a quick look at what a simple Spark Streaming program looks like. Let's say we want to count the number of words in text data received from a data server listening on a TCP socket.

First, we import the names of the Spark Streaming classes and some implicit conversions from StreamingContext into our environment in order to add useful methods to other classes we need (like DStream). StreamingContext is the main entry point for all streaming functionality. We create a local StreamingContext with two execution threads and a batch interval of 1 second.

    import org.apache.spark._
    import org.apache.spark.streaming._
    import org.apache.spark.streaming.StreamingContext._ // not necessary since Spark 1.3

    // Create a local StreamingContext with two worker threads and a batch interval of 1 second.
    // The master requires 2 cores to prevent a starvation scenario.
    val conf = new SparkConf().setMaster("local[2]").setAppName("NetworkWordCount")
    val ssc = new StreamingContext(conf, Seconds(1))

Using this context, we can create a DStream that represents streaming data from a TCP source, specified as a hostname (e.g. localhost) and a port (e.g. 9999).

    // Create a DStream that will connect to hostname:port, like localhost:9999
    val lines = ssc.socketTextStream("localhost", 9999)

This lines DStream represents the stream of data that will be received from the data server. Each record in this DStream is a line of text. Next, we split the lines into words on space characters.

    // Split each line into words
    val words = lines.flatMap(_.split(" "))

flatMap is a one-to-many DStream operation that creates a new DStream by generating multiple new records from each record in the source DStream. In this case, each line is split into multiple words, and the stream of words is represented as the words DStream. Next, we count these words.

    // Count each word in each batch
    val pairs = words.map(word => (word, 1))
    val wordCounts = pairs.reduceByKey(_ + _)

    // Print the first ten elements of each RDD generated in this DStream to the console
    wordCounts.print()

The words DStream is further mapped (a one-to-one transformation) to a DStream of (word, 1) pairs, which is then reduced to get the frequency of words in each batch of data. Finally, wordCounts.print() prints a few of the counts generated every second.

Note that when these lines are executed, Spark Streaming only sets up the computation it will perform once it is started; no real processing has started yet. To start the processing after all the transformations have been set up, we finally call:

    ssc.start()             // Start the computation
    ssc.awaitTermination()  // Wait for the computation to terminate

The complete code can be found in the Spark Streaming example NetworkWordCount.
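To see what the flatMap, map and reduceByKey steps compute for a single batch without starting Spark, the same logic can be sketched with ordinary Scala collections. This is only an illustration under stated assumptions: the object name BatchWordCountSketch, the helper countWords and the sample lines are made up for this sketch, and groupBy followed by a sum stands in for reduceByKey. It is not how Spark executes the job.

```scala
object BatchWordCountSketch {
  // Hypothetical helper (not part of Spark): applies the word-count steps
  // to one batch of lines held in a plain Scala Seq.
  def countWords(batch: Seq[String]): Map[String, Int] =
    batch
      .flatMap(_.split(" "))        // one-to-many: each line yields several words
      .map(word => (word, 1))       // one-to-one: each word becomes a (word, 1) pair
      .groupBy(_._1)                // stand-in for reduceByKey: group pairs by word...
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // ...and add the 1s

  def main(args: Array[String]): Unit = {
    // Made-up sample batch, as if two lines arrived within one batch interval.
    val batch = Seq("hello world", "hello spark")
    countWords(batch).toSeq.sortBy(_._1).foreach(println)
  }
}
```

For the sample batch, the pairs printed are (hello,2), (spark,1) and (world,1). In the streaming program the same computation is repeated on every one-second batch.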

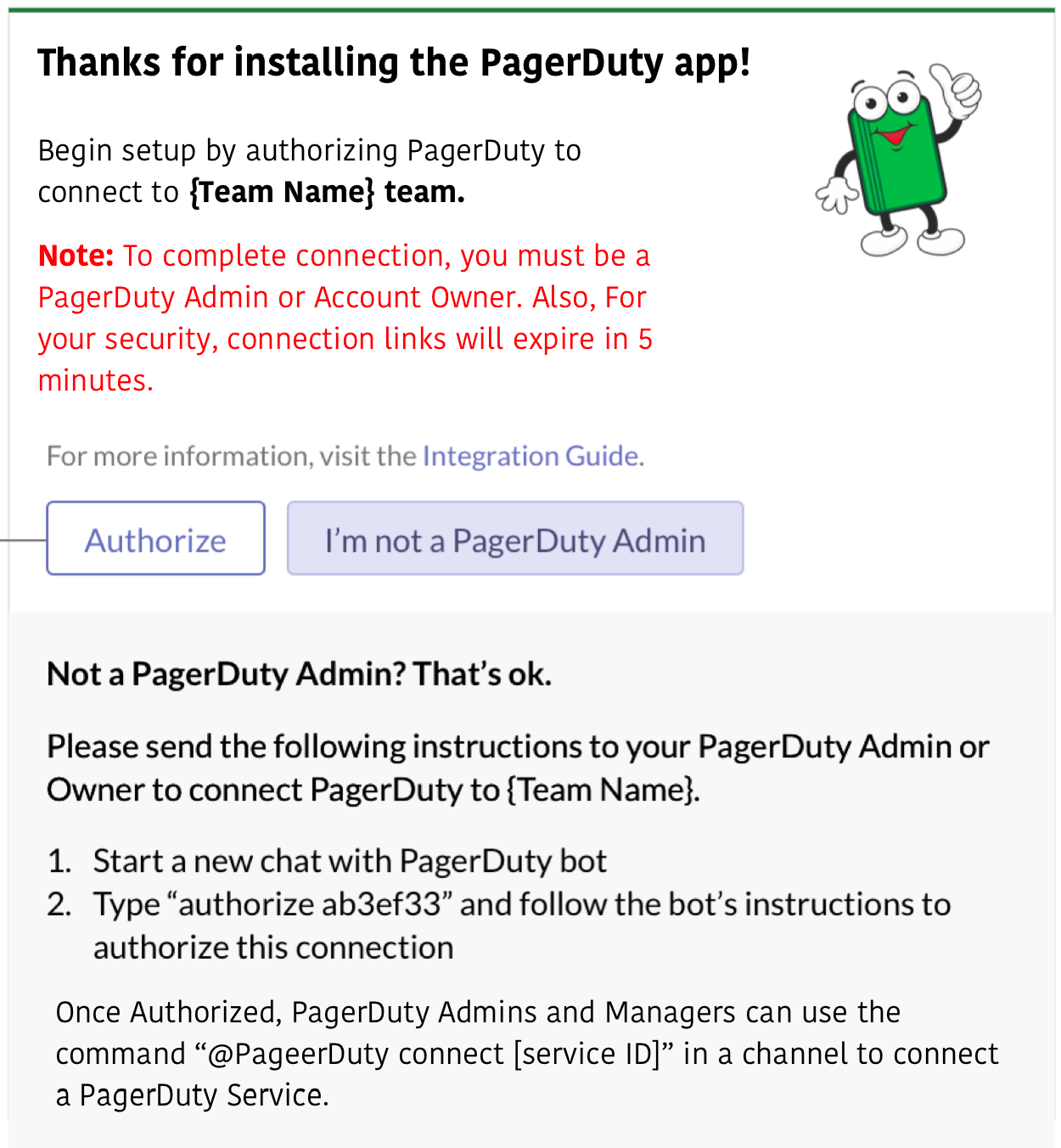

#Integration guide how to

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams. Data can be ingested from many sources like Kafka, Kinesis, or TCP sockets, and can be processed using complex algorithms expressed with high-level functions like map, reduce, join and window. Finally, processed data can be pushed out to filesystems, databases, and live dashboards. You can also apply Spark's machine learning and graph processing algorithms on data streams.

Internally, Spark Streaming receives live input data streams and divides the data into batches, which are then processed by the Spark engine to generate the final stream of results in batches.

Spark Streaming provides a high-level abstraction called discretized stream or DStream, which represents a continuous stream of data. DStreams can be created either from input data streams from sources such as Kafka and Kinesis, or by applying high-level operations on other DStreams. Internally, a DStream is represented as a sequence of RDDs.

This guide shows you how to start writing Spark Streaming programs with DStreams. You can write Spark Streaming programs in Scala, Java or Python (introduced in Spark 1.2), all of which are presented in this guide.

Note: There are a few APIs that are either different or not available in Python. Throughout this guide, you will find the tag Python API highlighting these differences.
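The idea of dividing a live stream into batches can be illustrated with a few lines of plain Scala. The sketch below is a conceptual model only, not Spark's implementation: the Event type, the toBatches helper and the sample timestamps are all hypothetical, and real DStreams are backed by RDDs rather than in-memory sequences.

```scala
object MicroBatchSketch {
  // Hypothetical record type: an arrival time in milliseconds plus a payload.
  final case class Event(timestampMs: Long, payload: String)

  // Assign each event to the batch whose interval contains its arrival time,
  // then return the batches in order, each with its payloads in arrival order.
  def toBatches(events: Seq[Event], batchIntervalMs: Long): Seq[(Long, Seq[String])] =
    events
      .groupBy(e => e.timestampMs / batchIntervalMs) // batch index = time / interval
      .toSeq
      .sortBy(_._1)
      .map { case (batchIndex, batchEvents) =>
        (batchIndex, batchEvents.sortBy(_.timestampMs).map(_.payload))
      }

  def main(args: Array[String]): Unit = {
    // Made-up events spread over roughly three seconds.
    val events = Seq(Event(100, "a"), Event(900, "b"), Event(1200, "c"), Event(2500, "d"))
    toBatches(events, batchIntervalMs = 1000).foreach { case (index, payloads) =>
      println(s"batch $index: ${payloads.mkString(", ")}")
    }
  }
}
```

With a 1000 ms interval, events a and b fall into batch 0, c into batch 1 and d into batch 2. Each such batch is what the processing engine would then handle as one unit.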
